It seems there’s hardly a profession left untouched by the question: “Will AI take our jobs?” Testers know this feeling better than most, as the conversation has grown especially loud among them.

But the truth, as always, is more complicated. AI really can do a lot, yet it still lacks something that every experienced tester has: that quiet sense that something is off, even when the logs look fine.

That’s why researchers recently decided to check who’s really better at finding bugs: people or AI. The results turned out to be surprising, perhaps even reassuring for humans.

The testing ground

To keep the duel fair, both contenders stepped onto the same testing ground. Researchers collected 120 real academic websites built by people with different coding habits. Perfect territory for hidden bugs.

The AI tester went first. It crawled through each site, clicking buttons, checking links, measuring layout shifts, and logging every usability glitch it could find. In minutes, it produced thick reports packed with warnings, screenshots, and metrics.

Then came the humans. A team of QA engineers combed through the same sites, comparing notes with the AI’s findings. On a smaller batch of 80 websites, they did everything manually, and the resulting list of defects became the reference point, the ground truth of what was actually broken.

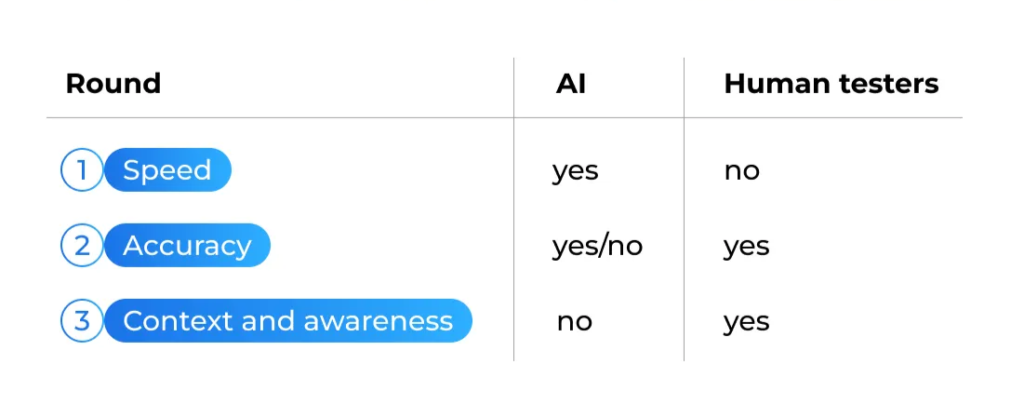

Round one: when AI races ahead

While human testers were still opening the first page, the AI had already finished scanning its 10th site. The AI worked steadily and predictably, whereas people had to stop, check, recheck, and document each step. That’s how quality control is supposed to work, but it’s also what slows it down. Speed gives AI the upper hand, as it doesn’t tire or get distracted. So, what exactly made it faster?

- Automation of repetitive checks: Forms, buttons, and navigation links were tested automatically without human supervision.

- Instant report generation: AI recorded every issue as it was found, without waiting for manual review.

- Parallel processing: Unlike a human, AI could analyze dozens of pages at once, with consistent attention to detail.
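To make "repetitive checks" concrete, here is a minimal sketch of the kind of mechanical pass a script can run over a page. It is not the tool used in the study; the class name `RepetitiveCheck`, the `audit` helper, and the sample page are all hypothetical, and it only catches the most obvious cases (links with no real target, images with no source).

```python
from html.parser import HTMLParser


class RepetitiveCheck(HTMLParser):
    """Hypothetical checker: collects simple, mechanical findings from one page."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[int, str]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        line, _ = self.getpos()  # line where the current tag starts
        if tag == "a":
            # A missing, empty, or "#" href leads nowhere useful.
            href = (attributes.get("href") or "").strip()
            if href in ("", "#"):
                self.findings.append((line, "link without a real target"))
        elif tag == "img":
            # An image with no source cannot render.
            if not (attributes.get("src") or "").strip():
                self.findings.append((line, "image without a source"))


def audit(html: str) -> list[tuple[int, str]]:
    """Run the checker over one HTML document and return its findings."""
    checker = RepetitiveCheck()
    checker.feed(html)
    checker.close()
    return checker.findings


# Made-up sample page, for illustration only.
sample_page = """<nav>
<a href="/projects">Projects</a>
<a href="#">Read more</a>
<img src="">
</nav>"""

for line, message in audit(sample_page):
    print(f"line {line}: {message}")
```

A check like this never gets bored, but it also has no idea whether a link that does resolve points to the right paper. That judgment still needs a person.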

So yes, AI crossed the finish line first. But as the reports started coming in, the testers noticed something strange. Not every “bug” the AI found was real. The race for accuracy was just beginning.

Round two: what AI gets right (and wrong)

Across the 120 websites, AI found 29 real usability issues confirmed by experts. Most of these were subtle, the kind humans might miss after hours of repetition:

- Broken or misdirected links: The AI flagged links that didn’t lead where they promised. For instance, a “Read more” button under a project description opened the wrong paper. A small detail, but a clear UX flaw.

- Rendering errors: It caught missing or distorted images that broke page layout, something that damages trust in a professional portfolio.

- Logical inconsistencies: On one of the course pages, AI noticed a “Fall break” scheduled in the middle of the spring semester. It is one of those slips that seem minor, yet instantly break the logic of the page.

Yet even perfect aim means little if you don’t know where to shoot. AI can point at flaws all day, but only people know which ones actually break the experience.

Round three: the missing awareness

Let’s not forget that the main task of testing isn’t just to find bugs, but to confirm that the product performs as designed, in line with its quality and technical specifications. To do that, QA engineers master tools like Postman, DevTools, Charles, and Kibana, learn client-server architecture, work with databases, and write test documentation. Over time, this builds not just a toolkit, but an understanding, a mental model of WHY the system behaves the way it does.

AI, on the other hand, is great at taking over repetitive work and can measure loading speeds faster than any person. But it misses one crucial thing: awareness. It can see that something looks wrong, but it does not always understand why it’s wrong:

- 85% of the bugs flagged by AI turned out to be false positives. It often mistook its own technical limitations, like being blocked from opening a PDF, for actual website defects.

- Some errors came from wrong assumptions, where the AI lacked enough context. It might confuse a delayed element for missing content or treat a design choice as a layout error.

- AI did not find all 32 real bugs that the researchers had detected manually. It flagged only 19 of them, roughly 59% coverage. Most of the missed defects were buried deep in multi-level navigation or hidden behind dynamically loaded content. Because AI ran only one scan per site, it often stopped before reaching those sections.
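The arithmetic behind these figures is simple enough to sketch. The snippet below uses only the numbers quoted above (19 of 32 bugs found, 85% false positives). The function names are illustrative, and "precision" is just the flip side of the false-positive share, not a figure reported separately in the text.

```python
def coverage(found: int, total: int) -> float:
    """Share of known real bugs that the tool also flagged (often called recall)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return found / total


def precision_from_false_positive_share(fp_share: float) -> float:
    """Share of flagged items that were real, given the share that were false alarms."""
    if not 0.0 <= fp_share <= 1.0:
        raise ValueError("fp_share must be between 0 and 1")
    return 1.0 - fp_share


# Figures quoted in the article.
ai_coverage = coverage(found=19, total=32)
ai_precision = precision_from_false_positive_share(0.85)

print(f"coverage:  {ai_coverage:.1%}")   # 19 / 32
print(f"precision: {ai_precision:.0%}")  # 1 - 0.85
```

Read together, the two numbers tell the story of this round: the AI reached a bit more than half of the known defects, while most of what it reported still had to be thrown out by a human reviewer.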

Humans, however, see the system as a whole. They can trace behavior, sequence, and user expectations. They spot issues not because the code says so, but because something breaks the user’s path. AI can test thousands of buttons in seconds. But only a human can tell which one truly gets in the way.

The verdict: not a rival, but a partner

AI runs on input, rules, and instructions. It can’t step outside the parameters it’s given, as it doesn’t question, reason, or see the bigger picture. In testing, that limitation matters. As the concept of context-driven testing says, “the value of any practice depends on its context.” And context is something AI still can’t process, including contradictions, intent, or meaning.

That’s why neural networks won’t replace testers. They lack awareness, critical thinking, creativity, and emotional sense. But for testers who learn to use AI wisely, it becomes not a rival, but a powerful amplifier of human insight.